We love neural networks! We use them in our phones, our home speakers, our TVs, our cars, and almost everything else! We like them when they are correct, and we ignore them when they are wrong. The mercy we show when they are wrong also shows how much we adore them! After all, it wouldn’t hurt if there were a few dog photos in the results when you search for cats in your “Google Photos” app, right? But we cannot always afford to be this forgiving! Sometimes we deploy neural networks in safety-critical applications such as medical diagnosis or self-driving cars. A “simple” mistake there could be catastrophic, and we need to avoid it.

If I train a LeNet on the MNIST dataset, I can get an accuracy above 99% on the test data. But what if I deploy this network in a real-world setting, like a post office, to read zip codes? It will inevitably see digits that differ from the ones it was trained on, i.e., digits from a different distribution. For example, it might see a 5 rotated by 90 degrees. In this case, our network would predict that it is an 8, and do so confidently. In this setting, our neural network simply “doesn’t know that it doesn’t know”: instead of asking for expert help, it will confidently send the letter to the wrong address, and some poor grandmother will never receive her Christmas card! Sad story.

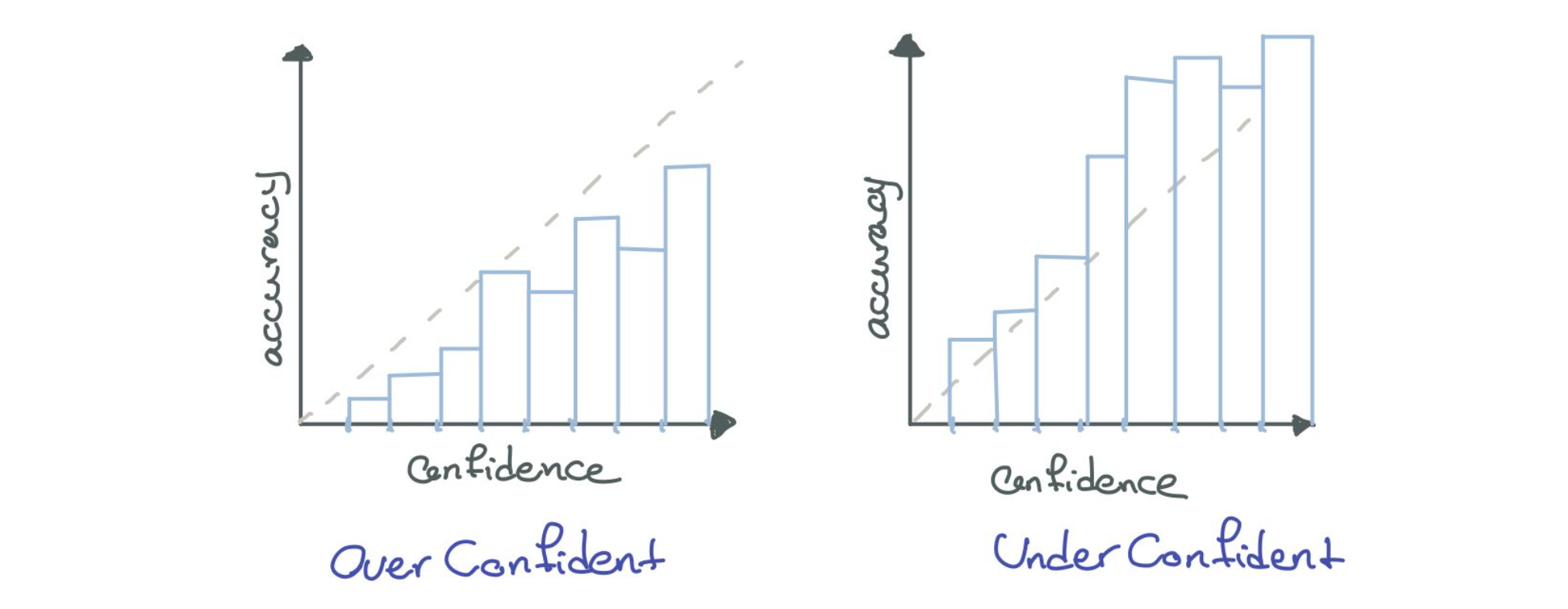

Our ideal neural networks are the kind that produce “calibrated uncertainties”. A well-calibrated model should be accurate when it is certain about its prediction and indicate high uncertainty when it is likely to be wrong. But what do we mean by uncertainty? At NeurIPS 2020, the Google AI Brain Team gave a neat definition: we need neural networks that return a distribution over predictions rather than a single prediction. With such a distribution, we can use its variance directly as an uncertainty estimate in regression tasks, and use the predicted probability p(y|x) as our confidence in classification tasks. Good uncertainty estimates tell us when we can trust the model’s predictions and when we should hand control to a human expert.
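To make “calibrated” a bit more concrete, one widely used metric is the expected calibration error (ECE). Take the confidence of each prediction to be max_y p(y|x), group predictions into bins by confidence, and average the gap between accuracy and mean confidence in each bin, weighted by how many predictions fall into it. The NumPy sketch below assumes equal-width bins and softmax outputs as input; the function name and the default of 10 bins are illustrative choices, not something prescribed by the sources above.

```python
import numpy as np


def expected_calibration_error(probs: np.ndarray, labels: np.ndarray, n_bins: int = 10) -> float:
    """Expected calibration error with equal-width confidence bins.

    probs:  (N, K) predicted class probabilities, e.g. softmax outputs.
    labels: (N,) integer ground-truth labels.
    """
    confidences = probs.max(axis=1)            # confidence = max_y p(y | x)
    predictions = probs.argmax(axis=1)
    correct = (predictions == labels).astype(float)

    edges = np.linspace(0.0, 1.0, n_bins + 1)
    n = len(labels)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        # Bins are (lo, hi]; for valid probabilities max_y p(y|x) >= 1/K > 0,
        # so every prediction lands in some bin.
        in_bin = (confidences > lo) & (confidences <= hi)
        if not in_bin.any():
            continue
        accuracy = correct[in_bin].mean()
        avg_confidence = confidences[in_bin].mean()
        # Weight each bin's |accuracy - confidence| gap by its share of samples.
        ece += in_bin.sum() / n * abs(accuracy - avg_confidence)
    return float(ece)


# Toy usage: two predictions at 95% confidence (one wrong), two at 65% (both right).
probs = np.array([[0.95, 0.05], [0.95, 0.05], [0.65, 0.35], [0.65, 0.35]])
labels = np.array([0, 1, 0, 0])
print(expected_calibration_error(probs, labels))
```

In the toy example, the model is right only half the time when it claims 95% confidence, and always right when it claims 65%, so ECE comes out at roughly 0.4; a perfectly calibrated model would score 0.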

You might think to yourself that, in classification, we already have probability estimates from the final softmax layer. Can’t we just use these as our confidence in the model’s prediction? Guo et al. show that we cannot. They show that modern neural networks are generally poorly calibrated, for several reasons, such as overfitting to the negative log-likelihood (NLL). They propose a simple and intuitive post-hoc calibration method, temperature scaling, which is applied to an already trained network to calibrate its predictions. However, Ovadia et al. show that this method works well only for i.i.d. data, i.e., data from the same distribution as the training set. As the data shifts away from the training distribution, calibration degrades and the model can once again be confidently wrong. So the poor grandmother probably won’t receive her Christmas card even if we apply temperature scaling to our model.
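Temperature scaling itself takes only a few lines. A single scalar T > 0 divides the logits before the softmax, and T is chosen to minimize the NLL on a held-out validation set. Because dividing by a positive constant does not change which logit is largest, accuracy stays the same and only the confidences move. The sketch below assumes NumPy, uses a plain grid search over T instead of a gradient-based optimizer, and runs on synthetic, deliberately overconfident logits; all function names and grid settings are illustrative.

```python
import numpy as np


def log_softmax(logits: np.ndarray, T: float = 1.0) -> np.ndarray:
    """Row-wise log-softmax of logits / T, computed in a numerically stable way."""
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def nll(logits: np.ndarray, labels: np.ndarray, T: float) -> float:
    """Mean negative log-likelihood of the true labels after scaling by T."""
    logp = log_softmax(logits, T)
    return float(-logp[np.arange(len(labels)), labels].mean())


def fit_temperature(val_logits: np.ndarray, val_labels: np.ndarray,
                    t_min: float = 0.05, t_max: float = 20.0, steps: int = 400) -> float:
    """Pick the T that minimizes validation NLL over a log-spaced grid.

    The grid search is a simplification; any 1-D optimizer would work.
    """
    grid = np.exp(np.linspace(np.log(t_min), np.log(t_max), steps))
    losses = [nll(val_logits, val_labels, T) for T in grid]
    return float(grid[int(np.argmin(losses))])


def calibrated_probs(logits: np.ndarray, T: float) -> np.ndarray:
    """Apply the learned temperature at test time: softmax(logits / T)."""
    return np.exp(log_softmax(logits, T))


if __name__ == "__main__":
    # Synthetic validation logits (hypothetical data, for illustration only).
    rng = np.random.default_rng(0)
    n, k = 2000, 10
    labels = rng.integers(0, k, size=n)
    logits = rng.normal(size=(n, k))
    logits[np.arange(n), labels] += 1.5   # true class gets a modest boost
    logits *= 5.0                          # inflate margins -> overconfident softmax
    T = fit_temperature(logits, labels)
    print(f"T = {T:.2f}, NLL at T=1: {nll(logits, labels, 1.0):.3f}, "
          f"NLL at fitted T: {nll(logits, labels, T):.3f}")
```

For this synthetic generator the well-calibrated probabilities are softmax(1.5·z), while the logits were inflated to 5·z, so the fitted T should land well above 1 (near 5/1.5 ≈ 3.3), softening the overconfident softmax. The learned T, however, is only as good as the resemblance between the validation set and the data the model later sees.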

I think we are all convinced now that we need better-calibrated neural networks: networks capable of reporting the uncertainty of their decisions. In future posts, I plan to go through various papers and explain the methods used for uncertainty estimation, from baselines to state-of-the-art approaches. For now, I would like to finish this post with the final paragraph of this excellent paper from Intel Labs:

> As AI systems backed by deep learning are used in safety-critical applications like autonomous vehicles, medical diagnosis, robotics etc., it is important for these systems to be explainable and trustworthy for successful deployment in real-world. Having the ability to derive uncertainty estimates provides a big step towards explainability of AI systems based on Deep Learning. Having calibrated uncertainty quantification provides grounded means for uncertainty measurement in such models. A principled way to measure reliable uncertainty is the basis on which trustworthy AI systems can be built. Research results and multiple resulting frameworks have been released for AI Fairness measurement that base components of fairness quantification on uncertainty measurements of classified output of deep learning models…… This explanation is also critical for building AI systems that are robust to adversarial blackbox and whitebox attacks. These well calibrated uncertainties can guide AI practitioners to better understand the predictions for reliable decision making, i.e. to know “when to trust” and “when not to trust” the model predictions (especially in high-risk domains like healthcare, financial, legal etc). In addition, calibrated uncertainty opens the doors for wider adoption of deep network architectures in interesting applications like multimodal fusion, anomaly detection and active learning. Using calibrated uncertainty as a measure for distributional shift (out-of-distribution and dataset shift) detection is also a key enabler for self-learning systems that form a critical component of realizing the dream of Artificial General Intelligence (AGI).
